Might May Not Always Make Right; but it Often Wins

By Keith Devlin @KeithDevlin@fediscience.org

The front cover of the 1989 book by Jon Barwise and John Etchemendy in which they provide a resolution of the classical Greek “Liar Paradox”. See the article for details.

The interest and debate started by the release into the wilds of the Large Language Model ChatGPT (the GPT stands for “Generative Pre-trained Transformer”, by the way) brought back memories of when I migrated across to the US from my native England back in 1987, to join a large and growing team of international researchers working at Stanford University’s Center for the Study of Language and Information (CSLI).

Using computers to process and (so some dreamers imagined) understand everyday language was just getting off the ground as a global research topic that blended computer science with linguistics. Since mathematics plays a role in both those disciplines, I was invited to join the team. I ended up extending my one-year stay to two, and, with the goal of staying as closely involved with that research as possible, remained in the US afterwards, to be better able to continue as an active member of the CSLI team, and returned full-time to the Center as Executive Director in 2001.

CSLI enjoys its 40th birthday this year, being founded (with $23M of initial funding) in 1983. The main thrust of the research thread on “natural language processing” (NLP) back then was taking existing – or developing new – theories of syntax and semantics and try to fold enough of a mathematical skeleton so engineers could make the linguistic theories work in a computer program. [There were a number of other research initiatives going on at CSLI at the same time, most of which I had no connection with, until I returned as the Executive Director.]

The particular NLP research thread I just referred to produced some useful results. Automobile manufacturers were interested and supportive from the start, and many of us now drive cars with speech processing systems that began with the kind of research done at CSLI and elsewhere. We also use such systems when we speak into a remote to change TV channels. But in the end, developing a scientific and mathematical understanding of natural language was not the way NLP went.

A few hundred feet away from CSLI, two young computer science graduate students were beavering away on their masters project to find a way to make library searches more efficient, given that personal computers were becoming cheaper and more powerful and increasingly being networked. As things worked out, they were helping to open the path that would eventually lead to language processing like ChatGPT.

As Terry Winograd, my CSLI colleague (and the advisor of the two students) in the Computer Science Department told the story, when he asked them how they were going to test the new algorithm they had developed, they replied, “There’s this new commercial network called the World Wide Web being built on top of the Internet; we thought we’d just let our algorithm loose on that.”

Which is precisely what they did. It worked. Better than anyone expected. And within a very short time (looking back, I am no longer sure if it was days or weeks, this was all back in the late 90s), the campus was abuzz with news that a couple of young graduate students had found a way to find information quickly and effectively on the Web. Not long afterwards, the rest of the world would hear about it too.

When Stanford, which had taken out a patent on the new invention, tried to license the new search product to one of the new Web companies that had been springing up around the campus, none were interested, so the university handed the patent over to the two students, who promptly founded a company they called Google.

I recognized the name at once as coming from the word googol, long familiar to mathematicians as the name for the number you get by writing a 1 followed by 100 zeroes. The word was made up in 1920 by nine-year-old boy called Milton Sirotta, whose uncle was the American mathematician Edward Kasner. At the time, Kasner was working with James Newman on a popular mathematics book that would be called Mathematics and the Imagination, first published in 1940. He asked his nephew to think of a name for the number 10 to the 100th power that he wanted to use in the book.

After Google the company was formed, it took me quite a while to become familiar with their spelling; it just looked plain wrong to someone like me who had known the “real” spelling since I had read Kastner’s book as a teenager. Today, the company name looks to me like the correct way to spell it.

In the same book, Kasner and Newman introduced another big number — one so large that it cannot even be written. (This was definitely the stuff to arouse the interest of young kids with a math bent.) That number was 1 followed by a googol zeros, which they called the googolplex. Google, the company, maintained that spelling for the name they gave to the headquarters building when they built their new campus in Mountain View, CA: The Googolplex.

But I digress – though only partially, since this essay is all about the power of large numbers. One of the downstream effects of the growth of the Web and the ability to search it, was it opened up a new era for NLP; instead of building systems on scientific theories of language, as the early NLP researchers at CSLI were doing, use pattern-matching techniques over vast databases of language generated by humans. Such databases were being created and grown all around the world, as more and more people found their way on to the Web.

As mathematicians, that approach would still give us a warm feeling that we were continuing to be useful to society. We were just handing the NLP reins to those math-consuming statisticians and digital-systems engineers to connect the requisite mathematics to the world.

Sure, being a statistical approach, it meant that, instead of the systems producing theory-backed accurate results every time, society would get a system that would be good for the majority of people the majority of the time. And the bigger the systems got, and the more data that became available, the better the performance would become, with a smaller and smaller percentage of users not getting what they wanted (or needed).

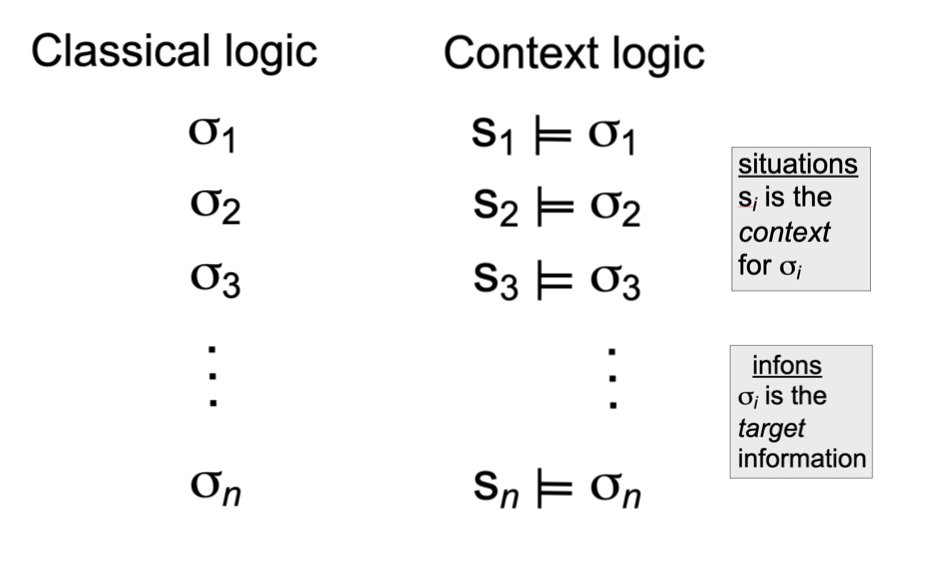

Two kinds of deductive chains. Using Situation Theory as an underlying framework, it’s possible to develop a “context-sensitive logic” that keeps track of the contexts where information is generated or received. Situation Theory was inspired in part by the subdiscipline of mathematical logic known as Model Theory. See the book Logic and Information (Devlin, K., 2001) for details.

As for me, not being into statistics (at least not in a big way), I took my toolbox and peddled my CSLI experience to industry and various government agencies dealing with intelligence analysis, where it is crucial to be aware of, and take account of, not just what is written or said (i.e., the signal, which is what statistical NLP systems work on), but also the specific context where information is produced and utilized. (I alluded to that work in the last couple of Angle posts.)

One of the coolest illustrations of what you can achieve when you include context in your mathematical reasoning is provided by Barwise and Etchemendy’s 1989 resolution of the classical Greek Liar Paradox. Their proof made us of the new mathematical framework provided by Situation Theory, which made explicit the contexts in which assertions were made.

I wrote about that result in Devlin’s Angle back in November 2003, where you will find a simple account of Barwise and Etchemendy’s proof.

See also this this review of the Barwise-Etchemendy book by Larry Moss in the Bulletin of the AMS. He examines their proof in more depth from a philosophical perspective.