Does AI Pose a Threat to Mathematics Education?

By Keith Devlin @KeithDevlin@fediscience.org

A Teacher Appreciation Week message to teachers from the NEA, posted on their website in April 2023.

National Teacher Day in the US takes place on Tuesday May 2 this year. Many countries celebrate their Teachers' Day on 5 October each year, in conjunction with World Teachers' Day, which was established by UNESCO in 1994, but the US is not alone in setting a different date. (Massachusetts apparently sets the first Sunday of June as its own annual Teachers' Day.)

The US holds Teacher Day in May so that it coincides with Teacher Appreciation Week, whose activities are organized by the National Education Association (NEA). Given the current assault on education at all levels by some US political factions, the apolitical mission of this blog leads me to avoid discussion (here) of the history and activities of Teacher Appreciation Week and of National Teacher Day (the NEA is a labor union for schoolteachers, and both unions and teachers are currently under assault), but Wikipedia is a good source for both.

In my own case, most years I am reminded of the various upcoming Teacher Days around the world when I receive an email or two from school or university students asking me to send a brief email to their favorite math teacher or professor, which I am happy to do, and which I have just agreed to do; hence this blog opener. (I get at most one or two a year; if this post goes viral, I likely won’t be able to oblige in future, unfortunately. But virality for Devlin’s Angle usually only occurs when I comment on K-12 mathematics guidelines.)

Talking of teachers, there’s been a lot of talk of late about GPT (Generative Pre-trained Transformer) systems like OpenAI’s ChatGPT, and the possible effects of that technology on education.

For humanities subjects, such systems are already stirring the educational waters, since they typically return a B-grade essay on demand, though the tests I’ve run have produced only essays that look like they were written by someone who really doesn’t understand what the text means. Which of course is true. They operate at the level of syntax, not semantics.

In mathematics, the “threat” such systems pose to education is significantly smaller. Any math test designed to be an effective assessment in the era of systems like Wolfram Alpha is not going to be gamed by a Large Language Model (of which GPTs are one kind).

Fig 1. ChatGPT has no understanding of the symbolic strings it processes; it operates primarily on probability distributions for what will come next. Humans are “hard-wired” to ascribe understanding (and a whole lot more) to any agent that interacts with us using language. It’s not that those systems produce intelligent answers; rather they produce answers that we automatically receive as coming from an intelligent agent. That’s what they are (implicitly) built to do. But it is we who provide the understanding and possibly ascribe conscious agency to the system.

A simple test run by my daughter, formerly a Google employee, demonstrated that ChatGPT not only lacks basic number sense, it also happily states mutually contradictory facts (Figure 1).
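For readers who want to see what “operating on probability distributions for what will come next” can look like in miniature, here is a toy sketch in Python. It is emphatically not how ChatGPT is built (real systems use large neural networks trained on vast amounts of text); it is a hypothetical word-pair counter, and the corpus, function names, and greedy word choice are all my own assumptions for illustration. The point is only that such a procedure tracks which words tend to follow which, and has no notion of whether a statement is true.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_distribution(counts, word):
    """Turn raw follower counts into a probability distribution."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}


def generate(counts, start, length):
    """Repeatedly append the most probable next word (greedy choice)."""
    output = [start]
    for _ in range(length):
        dist = next_word_distribution(counts, output[-1])
        if not dist:  # no known follower: stop early
            break
        output.append(max(dist, key=dist.get))
    return " ".join(output)


# Illustrative corpus (an assumption): two true statements and one false one.
corpus = (
    "two is less than three . "
    "three is less than four . "
    "four is less than two ."
)
model = train_bigram(corpus)

# After "than", the toy model considers "three", "four", and "two" equally
# likely; nothing in it represents which of the sentences are true.
print(next_word_distribution(model, "than"))
print(generate(model, "is", 2))
```

In this sketch the false sentence “four is less than two” shapes the statistics exactly as much as the true ones do, which is one way to picture how a purely statistical text generator can produce mutually contradictory statements without “noticing”.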

The problem with all AI is that those systems do not, and cannot, understand in the sense we humans do. Don’t blame them for that. We can’t even provide ourselves with a good explanation of what we mean when we say we understand something. But we surely do know what we mean.

That doesn’t mean AI systems are not useful. They assuredly are. For one thing, symbolic mathematics products such as Mathematica, Maple, Derive, and Wolfram Alpha are all AI systems (of a special kind); the car I drive is an AI system on wheels; and my kitchen is filled with appliances that have AI. And I am sure much the same is true for you.

But AI is, as the name says, artificial intelligence, which is not the same as human intelligence; likewise, machine understanding is not the same as human understanding, and machine learning is not the same as human learning. To confuse any of those pairs is a fundamental category error.

An appropriate linguistic analogy for terms like “artificial intelligence” and “machine understanding/learning” would not be “red car” (a kind of car) but “counterfeit money” (something that looks superficially like money, but isn’t appropriately connected to societies or their financial systems, and hence is not money at all).

With regard to education, I could put some flesh on the above remarks, but a recent article in the Washington Post does a good job of conveying the key points I would make.

It’s too late now to undo history, but that whole era when engineers put “artificial” in front of the names of various human capacities, because new technologies performed some aspects of some functions in common with people, was misguided. We don’t drive “artificial legs”; we drive “automobiles” (or even better, “cars”). We ride “bicycles”, not “artificial horses” (and likewise “cycling” is a far better term than “machine walking”). Those are two of the engineered products we use to get from place to place faster, and with less personal energy expended, than if we walked. (Yes, for a period our grandparents drove “horseless carriages”, but our smart ancestors soon found native terms for those new, four-wheeled, ICE-propelled mobility devices.)

Anyone who thinks an LLM comes even remotely close to human language use needs to take a university course on sociolinguistics, and likewise, anyone who thinks an AI system can understand language as we do should study ethnomethodology. For humans, language use is social-social-social all the way down (most likely bottoming out in the quantum domain, which is possibly where consciousness comes from).

Figure 2. Some of the mathematical tools available today. See my Devlin’s Angle post for February 2018.

Of course, AI systems do some things better than we do. So do my car and my bicycle. And just as my car and my bicycle expose me to risks I would not have had if I were just walking to get around, AI systems expose me to risks (some of them highly significant) I would not have faced were there no such systems. Neither is better than the other. They are different. The power and benefits of those technologies come from using them in a symbiotic way.

Meanwhile, I expect that tools such as ChatGPT will soon be an accepted part of the technological toolkit today’s mathematicians use (see Figure 2). We just have to learn how to make safe, optimal use of them.

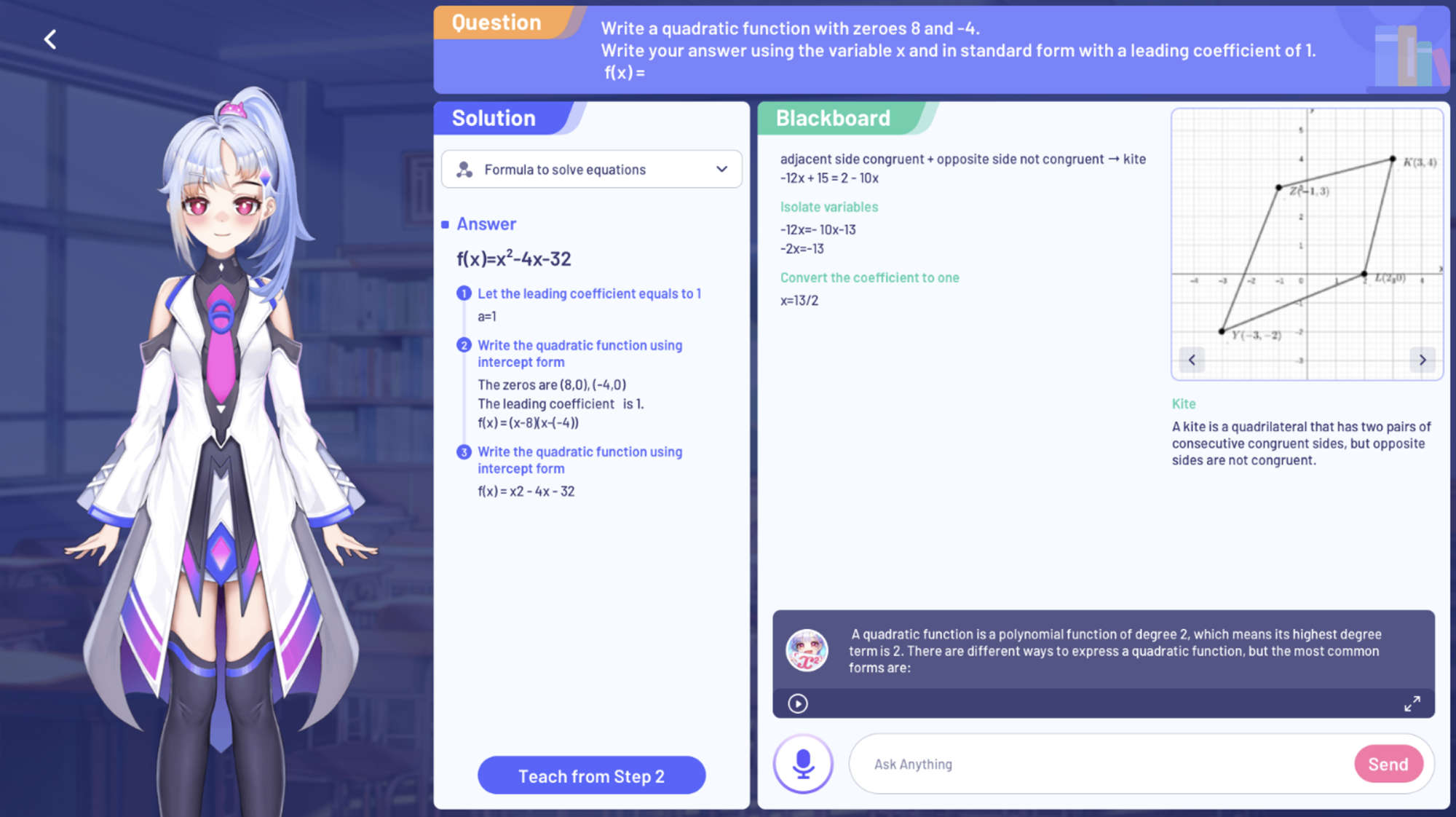

The first support tool for mathematics education that employs a GPT appears to be on the near horizon. See Figure 3.

From the looks of it, it is just a slick delivery system for the “getting appropriately targeted math facts in front of students’ eyeballs” ed tech model, which doesn’t excite me, and has all the educational failings cited in the Washington Post article referenced above.

But I don’t see it as inherently more damaging than any other electronic math-facts delivery system, and it may well turn out to be one of the best.

If it doesn’t, it won’t be for lack of funding for development. The Singapore-based startup company producing it is already valued at $100M, and is currently looking for major backers based on that valuation.

Fig 3. Tutor Eva, from Singapore-based startup Higgz Academia Technology Pte, was launched last July. The company is currently valued at $100M and is seeking major funding to incorporate ChatGPT. See the article in Bloomberg News.

I’m not motivated to test it out in depth, since I don’t think that’s remotely the best way to teach students just starting out on their educational journey. (Again, that Washington Post article explains why I think that.) But it looks sufficiently well thought out and executed to merit my using it as an example of where I think that technology is heading.

I definitely see a valuable role for interactive technology in mathematics education. Indeed, I co-founded my own educational technology company a few years ago to do just that. It’s based on video-game technology, which long ago became passé, and it requires thinking to make progress (the player finds the answer; the game provides visual assistance, but it won’t simply deliver the answer or the steps needed to get there). So we’re not in the market for $100M-level funding. We just want to provide students with a simple tool that can help them. You can read about the pedagogy behind our approach in this book, if you can find a copy at a fair price (or get it through your local library), or you can get some of the information piecemeal on the Web for free here.